Mojibake: Question Marks, Strange Characters and Other Issues

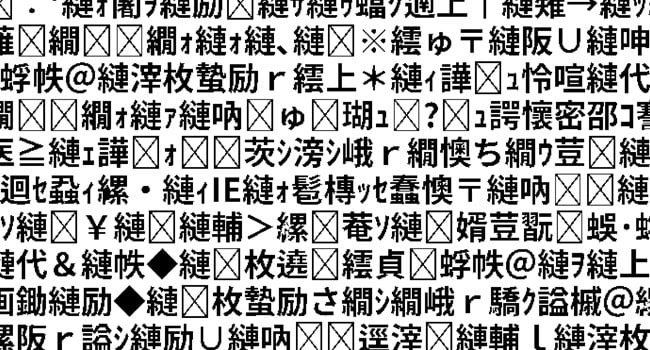

Have you ever found strange characters like these ��� when viewing content in applications or websites in other languages? What are these and where do they come from?

These strange, apparently misplaced characters are most likely the result of encoding issues, and they can be a headache. They show up often in the localization field, where content crosses borders, platforms and languages.

Character Encoding

Everything on a computer is a number. If we want to have letters on computers, we must all agree on which number corresponds to which letter. This is called “character encoding”.

Character encoding defines how the characters in a language's “character set” are represented on a computer. These mappings are defined in “code pages” or “character maps”: tables that pair each character with a specific sequence of ones and zeros.
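
To make the “everything is a number” idea concrete, here is a minimal Python sketch (Python is simply our choice for the examples in this article, and `describe` is a helper name made up for illustration). For plain ASCII characters, the number Python's built-in `ord()` returns is the same one ASCII assigns.

```python
def describe(ch: str) -> tuple[int, str]:
    """Return the number assigned to a character and its 8-bit binary form."""
    number = ord(ch)                      # character -> number
    return number, format(number, "08b")  # number -> pattern of ones and zeros

for ch in "Hi!":
    print(ch, *describe(ch))
# H 72 01001000
# i 105 01101001
# ! 33 00100001

print(chr(72))  # the reverse mapping, number -> character: H
```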

The simplest encoding is called ASCII. ASCII characters are stored using only 7 bits, which means that only 2^7 = 128 characters are possible.

ASCII works well for encoding basic English/Latin characters, but the world's writing systems use far more than 128 characters!
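
A quick way to check whether text fits in ASCII is to test that every character's number is below 2^7 = 128. The sketch below uses a hypothetical helper, `fits_in_ascii`, alongside Python's built-in ASCII codec, which refuses characters it cannot represent.

```python
def fits_in_ascii(text: str) -> bool:
    """True if every character has a code below 2**7 = 128."""
    return all(ord(ch) < 128 for ch in text)

print(fits_in_ascii("Signal"))        # True
print(fits_in_ascii("Señalización"))  # False: ñ and ó are outside ASCII

# The ASCII codec raises an error on the first character it cannot store.
try:
    "Señalización".encode("ascii")
except UnicodeEncodeError as err:
    print("Cannot encode:", err.object[err.start])  # Cannot encode: ñ
```

Python 3.7 and later also offer `str.isascii()`, which performs the same check.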

Things got complicated when Asian languages and computers met. Some languages, such as Chinese, have up to 60,000 different characters. This is where 16-bit encoding schemes came in, since 16 bits can store up to 2^16 = 65,536 different characters.
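
The bit counts in this section follow from simple arithmetic: n bits can represent 2^n distinct values. A tiny sketch (`capacity` is an illustrative name):

```python
def capacity(bits: int) -> int:
    """Number of distinct codes an n-bit scheme can represent."""
    return 2 ** bits

for bits in (7, 8, 16, 32):
    print(f"{bits:>2} bits -> {capacity(bits):,} values")
#  7 bits -> 128 values
#  8 bits -> 256 values
# 16 bits -> 65,536 values
# 32 bits -> 4,294,967,296 values
```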

Japan, for example, did not rely on ASCII alone and created its own encodings, with as many as four in use at the same time, all incompatible with each other. So if you sent a document from one Japanese computer to another that used a different encoding, the text would come out broken.

The Japanese have a term for this phenomenon: mojibake (文字化け).
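
You can reproduce this kind of Japanese mojibake with two real Japanese encodings that Python ships codecs for, Shift_JIS and EUC-JP. The sketch assumes the text was saved as Shift_JIS and opened by software expecting EUC-JP; `errors="replace"` turns undecodable bytes into � instead of raising an error. The exact garbled output depends on the bytes, so we only check that it no longer matches the original.

```python
original = "文字化け"  # "mojibake" written in Japanese

# Save the text with one Japanese encoding...
raw = original.encode("shift_jis")

# ...and open it on a system that assumes a different one.
garbled = raw.decode("euc_jp", errors="replace")

print(garbled)              # unreadable text instead of 文字化け
print(garbled == original)  # False

# Decoding with the encoding the file was actually saved in works fine.
print(raw.decode("shift_jis"))  # 文字化け
```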

Some languages affected by mojibake:

- Arabic

- French

- English: It may appear in typographic characters such as em dashes (—) or en dashes (–). It rarely affects the letters of the alphabet.

- Japanese

- Chinese: In Chinese, this phenomenon is called 亂碼 (luàn mǎ), or ‘chaotic code’.

- Languages based on the Cyrillic alphabet: Mojibake also affects languages such as Russian, Ukrainian, Belarusian and Tajik. In Bulgarian, mojibake is called “monkey's alphabet”, and in Serbian it is known as “garbage”.

- Polish

- Nordic languages: Mojibake also affects Nordic languages, although less often. Finnish and Swedish use the same alphabet as English, plus three additional letters: å, ä and ö.

- Spanish: Mojibake in Spanish can be translated as “deformation”, and the situation is similar to the Nordic languages: Spanish uses the 26 standard Latin letters but also includes ñ, accented vowels and sometimes ü. Because these characters are not part of ASCII, they are easily displayed incorrectly when the encoding is misread.
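
The Spanish problem comes down to bytes: ñ and ó take one byte in ISO 8859-1 (Latin-1) but two bytes in UTF-8, so software that guesses the wrong encoding reads different characters. A short sketch (`show_bytes` is an illustrative helper; `latin-1` is Python's name for ISO 8859-1):

```python
def show_bytes(ch: str, encoding: str) -> str:
    """Hex dump of how `encoding` stores a single character."""
    return ch.encode(encoding).hex(" ")

for ch in "ñó":
    print(ch, f"U+{ord(ch):04X}", show_bytes(ch, "latin-1"), "|", show_bytes(ch, "utf-8"))
# ñ U+00F1 f1 | c3 b1
# ó U+00F3 f3 | c3 b3
```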

Spanish example. Original text: Señalización

| File encoding | Setting in browser | Result |
|---|---|---|
| UTF-8 | Windows-1256 | Seأ±alizaciأ³n |
| ISO 8859-1 | Mac Roman | SeÒalizaciÛn |
| UTF-8 | ISO 8859-1 | SeÃ±alizaciÃ³n |
| UTF-8 | Mac Roman | Se√±alizaci√≥n |
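
Each row of a table like the one above can be reproduced by encoding the word with one encoding and decoding the bytes with another. This sketch uses Python's codec names `cp1256` (Windows-1256), `latin-1` (ISO 8859-1) and `mac_roman` (Mac Roman); `misread` is a hypothetical helper. A real browser may apply its own variant of a label (browsers treat ISO 8859-1 as Windows-1252, which gives the same result for these bytes).

```python
TEXT = "Señalización"

def misread(text: str, file_encoding: str, reader_encoding: str) -> str:
    """Save text in one encoding, then read the bytes back with another."""
    return text.encode(file_encoding).decode(reader_encoding)

print(misread(TEXT, "utf-8", "cp1256"))       # Seأ±alizaciأ³n
print(misread(TEXT, "latin-1", "mac_roman"))  # SeÒalizaciÛn
print(misread(TEXT, "utf-8", "latin-1"))      # SeÃ±alizaciÃ³n
print(misread(TEXT, "utf-8", "mac_roman"))    # Se√±alizaci√≥n

# No bytes were lost, so reversing the steps restores the original text.
garbled = misread(TEXT, "utf-8", "latin-1")
print(garbled.encode("latin-1").decode("utf-8"))  # Señalización
```

Because the bytes survive intact, this kind of mojibake can often be repaired by reversing the wrong decode, as the last two lines show.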

Unicode to the rescue

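Finally, someone had had enough and decided to create a single standard to unify all the encodings. This standard is called Unicode, and it is not an encoding in itself but a character set: it assigns a unique number, called a code point, to every character. Encodings such as UTF-8 and UTF-32 then define how those numbers are stored as bits.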

Unicode has a code space of 1,114,112 possible positions: enough for Arabic, Russian, Japanese, Korean, Chinese, the European languages and many more, with room left over for characters that have not been assigned yet. With Unicode you can write a document in any language.
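
To see code points in practice, Python exposes them through `ord()`, and `sys.maxunicode` holds the highest one, U+10FFFF. A minimal sketch:

```python
import sys

print(sys.maxunicode)      # 1114111, i.e. U+10FFFF
print(sys.maxunicode + 1)  # 1114112 positions, counting U+0000

# A few code points from different scripts.
for ch in "ñж文":
    print(ch, f"U+{ord(ch):04X}")
# ñ U+00F1
# ж U+0436
# 文 U+6587

try:
    chr(sys.maxunicode + 1)  # one past the end of the code space
except ValueError:
    print("U+110000 is outside Unicode")
```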

UTF-8 or UTF-32?

UTF-32 is a Unicode character encoding that uses a 32-bit number for every character. This is simple, but it wastes a lot of space: an English document would take up four times as much space as it would in ASCII.
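
The 4× overhead is easy to measure. The sketch uses Python's `utf-32-le` codec rather than plain `utf-32`, because the latter prepends a 4-byte byte order mark that would skew the count.

```python
text = "Localization"  # plain English, all ASCII

ascii_size = len(text.encode("ascii"))      # 1 byte per character
utf32_size = len(text.encode("utf-32-le"))  # 4 bytes per character, no BOM

print(ascii_size, utf32_size)  # 12 48
```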

Why use such a large number to hold Unicode's 1,112,064 valid values (the 1,114,112 positions minus 2,048 reserved for UTF-16 surrogates), when most of the time we only use the first 128?
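
The arithmetic behind those figures, as a quick check. It also shows that the largest code point, U+10FFFF, fits in 21 bits, so in UTF-32 the top 11 bits of every character are always zero.

```python
code_space = 0x10FFFF + 1         # U+0000..U+10FFFF: 1,114,112 positions
surrogates = 0xDFFF - 0xD800 + 1  # U+D800..U+DFFF: 2,048 reserved positions
usable = code_space - surrogates

print(f"{usable:,}")              # 1,112,064
print((0x10FFFF).bit_length())    # 21 bits needed for the largest code point
```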

UTF-8 is a “variable-length encoding”: each character takes between 8 and 32 bits (one to four bytes). ASCII characters take a single byte, while other characters take two, three or four bytes as needed. This lets UTF-8 represent any Unicode character without wasting space on plain ASCII text, which has made it the most popular character encoding.
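
To show how the variable length works, here is a hand-rolled UTF-8 encoder for a single code point, written for illustration only (`utf8_encode` is our own name; real code should simply call `str.encode("utf-8")`). The number of bytes depends on how many bits the code point needs, and each result is compared with Python's built-in encoder.

```python
def utf8_encode(code_point: int) -> bytes:
    """Illustrative UTF-8 encoder for one Unicode scalar value."""
    if not 0 <= code_point <= 0x10FFFF or 0xD800 <= code_point <= 0xDFFF:
        raise ValueError(f"not a Unicode scalar value: {code_point:#x}")
    if code_point < 0x80:     # up to 7 bits -> 1 byte: 0xxxxxxx
        return bytes([code_point])
    if code_point < 0x800:    # up to 11 bits -> 2 bytes: 110xxxxx 10xxxxxx
        return bytes([0xC0 | (code_point >> 6),
                      0x80 | (code_point & 0x3F)])
    if code_point < 0x10000:  # up to 16 bits -> 3 bytes: 1110xxxx + 2 continuation bytes
        return bytes([0xE0 | (code_point >> 12),
                      0x80 | ((code_point >> 6) & 0x3F),
                      0x80 | (code_point & 0x3F)])
    # up to 21 bits -> 4 bytes: 11110xxx + 3 continuation bytes
    return bytes([0xF0 | (code_point >> 18),
                  0x80 | ((code_point >> 12) & 0x3F),
                  0x80 | ((code_point >> 6) & 0x3F),
                  0x80 | (code_point & 0x3F)])

for ch in "Añ文😀":
    encoded = utf8_encode(ord(ch))
    print(ch, len(encoded), encoded == ch.encode("utf-8"))
# A 1 True
# ñ 2 True
# 文 3 True
# 😀 4 True
```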

Conclusion

Today the most widely used standard is UTF-8, since it can encode any character and is backward compatible with ASCII. It is also space-efficient, especially for text that is mostly ASCII, which makes it the best choice in most cases.
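
Backward compatibility with ASCII has two practical sides, sketched below: any ASCII file is already valid UTF-8, and UTF-8 never uses bytes below 0x80 inside a multi-byte character, so non-ASCII text cannot be mistaken for ASCII letters, digits or punctuation.

```python
ascii_bytes = "Localization".encode("ascii")

# 1. ASCII bytes decode as UTF-8 to exactly the same text.
print(ascii_bytes.decode("utf-8") == "Localization")  # True

# 2. Every byte of a non-ASCII character in UTF-8 is 0x80 or higher.
for ch in "ñó文😀":
    print(ch, all(b >= 0x80 for b in ch.encode("utf-8")))  # True for each
```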

Making sure your application is “localization ready” is key to building applications that can be easily localized. Supporting different character encodings and choosing the right one are two crucial steps in any localization project, whether for websites or software.
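
One concrete “localization ready” habit is to state the encoding whenever you read or write text, instead of relying on the platform default, and to fail loudly rather than silently produce mojibake. A minimal sketch, assuming your files are meant to be UTF-8 (`read_text_strict` is a hypothetical helper, not a standard function):

```python
import tempfile
from pathlib import Path

def read_text_strict(path: Path) -> str:
    """Read a file as UTF-8 and fail loudly instead of producing mojibake."""
    try:
        return path.read_text(encoding="utf-8")  # errors="strict" is the default
    except UnicodeDecodeError as err:
        raise ValueError(f"{path} is not valid UTF-8 (byte {err.start})") from err

with tempfile.TemporaryDirectory() as tmp:
    good = Path(tmp) / "good.txt"
    good.write_text("Señalización", encoding="utf-8")
    print(read_text_strict(good))  # Señalización

    bad = Path(tmp) / "bad.txt"
    bad.write_text("Señalización", encoding="latin-1")  # saved with the "wrong" encoding
    try:
        read_text_strict(bad)
    except ValueError as err:
        print(err)  # reports that bad.txt is not valid UTF-8
```

Failing early this way surfaces an encoding mismatch during development or file import, before garbled text reaches translators or users.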